As a long-time PHP developer, I usually find myself knee-deep in OpenCart, Drupal, Laravel, or WordPress hooks. But today, I had a different kind of challenge: scraping product reviews from a website and saving them into a CSV file.

Initially, I thought about doing this in PHP, but after a quick search and some community advice, I found that Python offers a much more elegant and beginner-friendly approach for web scraping. Even better, I discovered Windsurf, an AI-powered IDE that made the entire process feel smooth and intuitive.

Why Not PHP?

While it’s technically possible to build a scraper in PHP using tools like cURL and DOMDocument, it lacks the simplicity and rich ecosystem of Python’s scraping libraries. And let’s be honest: debugging those cURL headers or dealing with malformed HTML in PHP is no picnic.

Why Python is Great for Web Scraping

Python offers:

- Libraries like `requests` and `BeautifulSoup` that simplify HTTP requests and HTML parsing.

- Easy CSV handling with the built-in `csv` module.

- Tons of community support and tutorials.
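To make the CSV point concrete, here is a minimal sketch that uses only the built-in `csv` module. The `reviews_to_csv` helper and the sample row are made up for this illustration, and the output goes to an in-memory buffer rather than a file so it can run anywhere.

```python
import csv
import io

FIELDS = ["Product Title", "Reviewer Name", "Stars", "Review Content"]


def reviews_to_csv(rows):
    """Serialize a list of review dicts to CSV text (hypothetical helper)."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


# Made-up sample data; the commas in the review text are quoted automatically.
sample = [
    {
        "Product Title": "Sample Product",
        "Reviewer Name": "Alex",
        "Stars": "5",
        "Review Content": "Clear, well organized, and easy to use.",
    }
]

print(reviews_to_csv(sample))
```

Swapping the `io.StringIO()` buffer for `open("reviews.csv", "w", newline="", encoding="utf-8")` would write the same rows to disk.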

What is Windsurf?

Windsurf is an AI-powered IDE that not only provides coding suggestions but also assists in building entire workflows and understanding your intent. It was a perfect companion for someone like me transitioning from PHP to Python.

Building the Web Scraper in Python with Windsurf Prompts

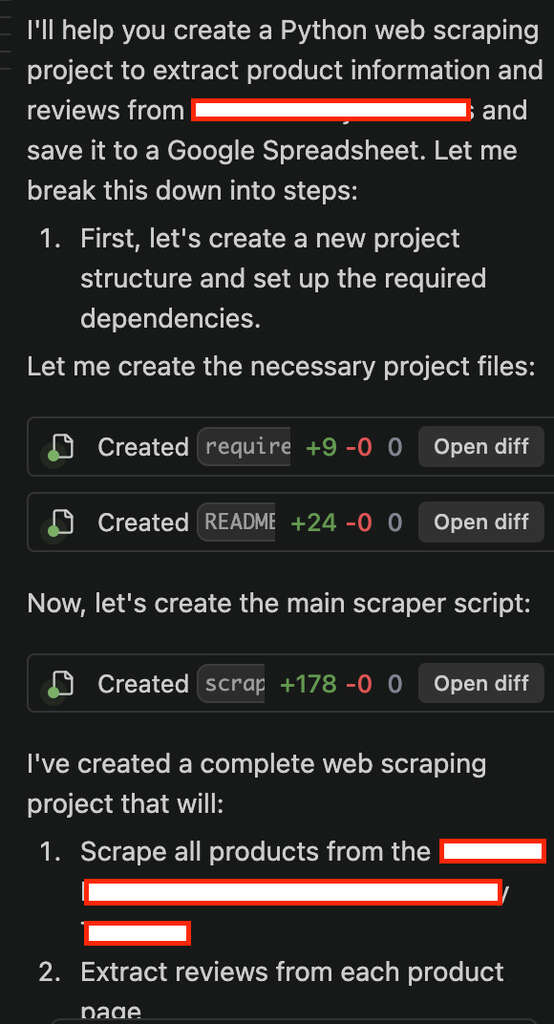

I downloaded Windsurf, installed it, and gave it my first prompt:

Create a python project which do the webscarping from all of the products listed on https://www.examplestore.com/category/products and from the product details page like https://www.examplestore.com/category/products/product1 extract all ratings and add that to the CSV. Add the product title, reviewer name, stars, and the contents.

With that prompt, Windsurf started its work:

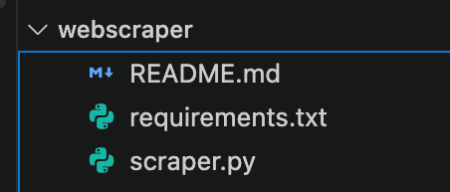

It created the three files below and wrote the necessary code:

Readme.md

## Setup

1. Install dependencies:

```bash
pip install -r requirements.txt
```

2. Run the scraper:

```bash
python scraper.py
```

The script will create a CSV file with the current timestamp in the filename (e.g., `tpt_reviews_2025-04-24_18-06-31.csv`) containing all the scraped reviews with the following columns:

- Product Title

- Reviewer Name

- Stars

- Review Content

requirements.txt

requests==2.31.0
beautifulsoup4==4.12.2
selenium==4.15.2
webdriver_manager==4.0.1
geckodriver-autoinstaller==0.1.0
pandas==2.1.3

scraper.py

Below is the final code Windsurf created:

import time
from selenium import webdriver
from selenium.webdriver.common.by import By
from selenium.webdriver.support.ui import WebDriverWait
from selenium.webdriver.support import expected_conditions as EC
from selenium.common.exceptions import TimeoutException, WebDriverException
from selenium.webdriver.firefox.service import Service
from selenium.webdriver.firefox.options import Options
from bs4 import BeautifulSoup
import pandas as pd
from datetime import datetime
import csv
import os
import platform
import logging
import geckodriver_autoinstaller
import random
import json

# Set up logging
logging.basicConfig(level=logging.INFO)
logger = logging.getLogger(__name__)

class TPTScraper:
    def __init__(self):
        logger.info("Initializing TPTScraper...")
        try:
            # Install geckodriver if not present
            logger.info("Installing/Updating geckodriver...")
            geckodriver_autoinstaller.install()

            # Setup Firefox options with preferences
            firefox_options = Options()
            if platform.system() != "Darwin":  # Don't run headless on Mac
                firefox_options.add_argument("--headless")
            firefox_options.add_argument("--window-size=1920,1080")
            firefox_options.add_argument("--disable-blink-features=AutomationControlled")
            firefox_options.add_argument("--disable-dev-shm-usage")  # Overcome limited resource problems
            firefox_options.add_argument("--no-sandbox")  # Required for stability
            firefox_options.add_argument("--disable-gpu")  # Required for stability

            # Set preferences
            firefox_options.set_preference("dom.webdriver.enabled", False)
            firefox_options.set_preference('useAutomationExtension', False)
            firefox_options.set_preference("general.useragent.override", "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/16.5 Safari/605.1.15")
            firefox_options.set_preference("network.http.referer.spoofSource", True)
            firefox_options.set_preference("browser.cache.disk.enable", False)
            firefox_options.set_preference("browser.cache.memory.enable", False)
            firefox_options.set_preference("browser.cache.offline.enable", False)
            firefox_options.set_preference("network.cookie.cookieBehavior", 0)
            firefox_options.set_preference("browser.sessionstore.resume_from_crash", False)
            firefox_options.set_preference("browser.tabs.remote.autostart", False)

            logger.info("Starting Firefox WebDriver...")
            self.driver = webdriver.Firefox(options=firefox_options)
            self.driver.execute_script("Object.defineProperty(navigator, 'webdriver', {get: () => undefined})")

            # Add random mouse movements and scrolling
            self.driver.execute_script("""
                var mouseMove = function() {
                    var event = new MouseEvent('mousemove', {
                        'view': window,
                        'bubbles': true,
                        'cancelable': true,
                        'clientX': Math.random() * window.innerWidth,
                        'clientY': Math.random() * window.innerHeight
                    });
                    document.dispatchEvent(event);
                };
                setInterval(mouseMove, 1000);
            """)

            self.store_url = "https://www.examplestore.com/category/products"

            # Set up CSV file
            self.csv_filename = f'tpt_reviews_{datetime.now().strftime("%Y-%m-%d_%H-%M-%S")}.csv'
            logger.info(f"CSV file will be saved as: {self.csv_filename}")
            self.setup_csv()
        except Exception as e:
            logger.error(f"Error initializing Firefox WebDriver: {e}")
            raise

    def random_sleep(self, min_seconds=2, max_seconds=5):
        """Sleep for a random amount of time."""
        time.sleep(random.uniform(min_seconds, max_seconds))

    def human_like_scroll(self):
        """Scroll the page in a human-like manner."""
        total_height = self.driver.execute_script("return document.body.scrollHeight")
        viewport_height = self.driver.execute_script("return window.innerHeight")
        current_position = 0

        while current_position < total_height:
            scroll_step = random.randint(100, 400)  # Random scroll amount
            current_position = min(current_position + scroll_step, total_height)
            self.driver.execute_script(f"window.scrollTo(0, {current_position})")
            self.random_sleep(0.5, 1.5)  # Random pause between scrolls

    def retry_with_backoff(self, func, retries=3, backoff_in_seconds=1):
        """Retry a function with exponential backoff."""
        x = 0
        while True:
            try:
                return func()
            except Exception as e:
                if x == retries:
                    raise
                sleep = (backoff_in_seconds * 2 ** x +
                         random.uniform(0, 1))
                logger.warning(f"Retrying in {sleep} seconds... Error: {e}")
                time.sleep(sleep)
                x += 1

    def setup_csv(self):
        """Create CSV file with headers."""
        logger.info("Setting up CSV file...")
        headers = ['Product Title', 'Product URL', 'Reviewer Name', 'Stars', 'Review Content', 'Review Date']
        with open(self.csv_filename, 'w', newline='', encoding='utf-8') as f:
            writer = csv.writer(f)
            writer.writerow(headers)
        logger.info("CSV file created successfully")

    def wait_for_element(self, by, value, timeout=30):
        """Wait for an element to be present in the DOM (visibility is not checked)."""
        return WebDriverWait(self.driver, timeout).until(
            EC.presence_of_element_located((by, value))
        )

    def get_product_links(self):
        """Get all product links from the store."""
        logger.info("Starting to collect product links from all pages")
        product_links = []

        # Loop through pages 1 and 2 (raise the upper bound of range() to crawl more pages)
        for page in range(1, 3):
            try:
                page_url = f"{self.store_url}?page={page}"
                logger.info(f"Processing page {page}: {page_url}")

                # Navigate to the page
                self.driver.get(page_url)
                self.random_sleep(3, 5)  # Initial wait for page load

                # Wait for products to load with retry
                def wait_for_products():
                    self.wait_for_element(By.CSS_SELECTOR, ".ProductRowLayoutCard")
                    return True

                self.retry_with_backoff(wait_for_products)
                self.random_sleep(2, 4)  # Random sleep between actions

                # Scroll the page naturally
                self.human_like_scroll()

                # Get current page's products
                soup = BeautifulSoup(self.driver.page_source, 'html.parser')
                products = soup.find_all('div', class_='ProductRowLayoutCard')
                logger.info(f"Found {len(products)} products on page {page}")

                # If no products found on this page, we've reached the end
                if not products:
                    logger.info(f"No products found on page {page}, stopping pagination")
                    break

                for product in products:
                    link = product.find('a')
                    if link and 'href' in link.attrs:
                        full_link = f"https://www.examplestore.com{link['href']}"
                        if full_link not in product_links:  # Avoid duplicates
                            product_links.append(full_link)

                # Add some randomization to avoid detection
                self.random_sleep(2, 4)
            except TimeoutException:
                logger.error(f"Timeout while processing page {page}")
                continue
            except Exception as e:
                logger.error(f"Error processing page {page}: {str(e)}")
                continue

        logger.info(f"Found total of {len(product_links)} unique product links")
        return product_links

    def get_product_reviews(self, product_url):
        """Get all reviews for a product and append them to the CSV file."""
        try:
            logger.info(f"Getting reviews for: {product_url}")
            self.driver.get(product_url)
            self.random_sleep(3, 5)

            # Wait for and get the product title
            try:
                title = self.wait_for_element(By.TAG_NAME, "h1").text
                logger.info(f"Processing reviews for product: {title}")
            except TimeoutException:
                logger.error("Could not find product title")
                title = "Unknown Product"

            # Click "Load More" button until all reviews are loaded
            while True:
                try:
                    # Check if the load more button exists
                    load_more = WebDriverWait(self.driver, 10).until(
                        EC.presence_of_element_located((By.CLASS_NAME, "EvaluationsContainer__loadMore-button"))
                    )

                    # Check if button is visible and clickable
                    if load_more.is_displayed() and load_more.is_enabled():
                        # Scroll the button into view
                        self.driver.execute_script("arguments[0].scrollIntoView({behavior: 'smooth', block: 'center'});", load_more)
                        self.random_sleep(1, 2)

                        # Try clicking with regular click first, fallback to JavaScript click
                        try:
                            load_more.click()
                        except Exception:
                            self.driver.execute_script("arguments[0].click();", load_more)

                        # Wait for new reviews to load
                        self.random_sleep(2, 3)

                        # Add some random mouse movements
                        self.driver.execute_script("""
                            document.dispatchEvent(new MouseEvent('mousemove', {
                                'view': window,
                                'bubbles': true,
                                'cancelable': true,
                                'clientX': Math.random() * window.innerWidth,
                                'clientY': Math.random() * window.innerHeight
                            }));
                        """)
                    else:
                        logger.info("Load more button is not clickable")
                        break
                except TimeoutException:
                    logger.info("No more 'Load More' button found - all reviews loaded")
                    break
                except Exception as e:
                    logger.error(f"Error clicking load more button: {str(e)}")
                    break

            # Now get all the loaded reviews
            soup = BeautifulSoup(self.driver.page_source, 'html.parser')
            reviews = soup.find_all('div', class_='EvaluationsList-module__item--cpleN')
            logger.info(f"Found {len(reviews)} total reviews for {title}")

            for review in reviews:
                try:
                    reviewer_name = review.find('div', class_='Text-module__inline--zQe6C').text.strip() if review.find('div', class_='Text-module__inline--zQe6C') else "Anonymous"
                    stars = review.find('div', class_='RatingsLabel-module__ratingsLabel--lMWgy').text.strip() if review.find('div', class_='RatingsLabel-module__ratingsLabel--lMWgy') else ""
                    review_text = review.find('div', class_='EvaluationDisplay-module__content--NHSIA').text.strip() if review.find('div', class_='EvaluationDisplay-module__content--NHSIA') else ""
                    review_date = review.find('div', class_='Text-module__colorSecondary--jnRRH').text.strip() if review.find('div', class_='Text-module__colorSecondary--jnRRH') else ""

                    # Write the review to CSV
                    with open(self.csv_filename, 'a', newline='', encoding='utf-8') as f:
                        writer = csv.writer(f)
                        writer.writerow([
                            title,
                            product_url,
                            reviewer_name,
                            stars,
                            review_text,
                            review_date
                        ])
                except Exception as e:
                    logger.error(f"Error processing review: {str(e)}")
                    continue
        except Exception as e:
            logger.error(f"Error processing product {product_url}: {str(e)}")

    def save_to_csv(self, reviews):
        """Save reviews to CSV file."""
        if not reviews:
            logger.info("No reviews to save")
            return

        logger.info(f"Saving {len(reviews)} reviews to CSV")
        try:
            with open(self.csv_filename, 'a', newline='', encoding='utf-8') as f:
                writer = csv.writer(f)
                for review in reviews:
                    writer.writerow([
                        review['Product Title'],
                        review['Product URL'],
                        review['Reviewer Name'],
                        review['Stars'],
                        review['Review Content'],
                        review['Review Date']
                    ])
        except Exception as e:
            logger.error(f"Error saving to CSV: {e}")

    def run(self):
        """Main method to run the scraper."""
        try:
            logger.info("Starting scraper...")
            product_links = self.get_product_links()
            logger.info(f"Found {len(product_links)} products")

            for i, product_url in enumerate(product_links, 1):
                logger.info(f"Processing product {i}/{len(product_links)}")
                # get_product_reviews() writes each row itself and returns None,
                # so the save_to_csv() branch below is not taken as written.
                reviews = self.get_product_reviews(product_url)
                if reviews:
                    self.save_to_csv(reviews)
                self.random_sleep(2, 4)  # Be nice to the server

            logger.info(f"\nScraping completed! Data saved to: {self.csv_filename}")
        finally:
            logger.info("Closing Firefox WebDriver")
            self.driver.quit()

if __name__ == "__main__":
    scraper = TPTScraper()
    scraper.run()

The project uses:

- Selenium with Firefox WebDriver (geckodriver) for dynamic page scraping

- BeautifulSoup for HTML parsing

- The built-in csv module for saving the data

- Proper error handling and rate limiting
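The error handling and rate limiting mostly come down to retrying with exponential backoff. Here is a standalone sketch of that idea, modeled on the `retry_with_backoff` method above. The `flaky` function and the injectable `sleep` parameter are my own additions for illustration, so the example runs without actually waiting.

```python
import random
import time


def retry_with_backoff(func, retries=3, base_delay=1.0, sleep=time.sleep):
    """Call func(); on failure wait base_delay * 2**attempt plus jitter, then retry."""
    attempt = 0
    while True:
        try:
            return func()
        except Exception:
            if attempt == retries:
                raise  # out of retries: surface the last error
            delay = base_delay * 2 ** attempt + random.uniform(0, 1)
            sleep(delay)
            attempt += 1


# Hypothetical flaky operation: fails twice, then succeeds.
calls = {"count": 0}


def flaky():
    calls["count"] += 1
    if calls["count"] < 3:
        raise RuntimeError("temporary failure")
    return "ok"


delays = []
# Record the delays in a list instead of really sleeping.
result = retry_with_backoff(flaky, sleep=delays.append)
print(result, len(delays))
```

The random jitter added to each delay keeps repeated requests from landing at perfectly regular intervals.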

To use this scraper, you’ll need to install the required dependencies. Windsurf gave me the following command:

pip install -r requirements.txt

When I ran it, I got “zsh: command not found: pip”. Instead of solving it myself, I pasted the error into Windsurf, and it replied:

It seems Python is not installed on your system or not properly added to your PATH. Let's check if Python is installed using the which command

It then found that python3 was installed and updated the command to the following:

pip3 install -r requirements.txt

After that, it prompted me to run the scraper using python3:

python3 scraper.py

Output

The script pulls each review's product title, content, and rating from the website and stores them neatly in a tpt_reviews***.csv file. After I ran the scraper several times, the website seemed to detect me as a bot, so I asked Windsurf whether there was a solution. It updated the code and told me the following:

I made several improvements to better handle the website’s bot detection:

- Added a Firefox profile with specific settings to make automation less detectable

- Disabled headless mode on Mac (since it’s more detectable)

- Added human-like scrolling behavior

- Added random mouse movements

- Increased wait times between actions

- Added more sophisticated retry mechanisms

- Added proper scrolling to elements before clicking

- Improved error handling
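To give a feel for the "human-like" scrolling and randomized waits in that list, here is a small browser-free sketch. The function names and the seeded generator are assumptions made for this example; the step and pause ranges mirror the ones in `human_like_scroll` above.

```python
import random


def scroll_positions(total_height, min_step=100, max_step=400, rng=random):
    """Return increasing scroll offsets with random step sizes, ending at total_height."""
    positions = []
    current = 0
    while current < total_height:
        current = min(current + rng.randint(min_step, max_step), total_height)
        positions.append(current)
    return positions


def pause_lengths(count, min_seconds=0.5, max_seconds=1.5, rng=random):
    """Return one random pause (in seconds) per scroll step."""
    return [rng.uniform(min_seconds, max_seconds) for _ in range(count)]


rng = random.Random(42)  # seeded only so the example is repeatable
steps = scroll_positions(2000, rng=rng)
pauses = pause_lengths(len(steps), rng=rng)
print(steps[-1], len(steps) == len(pauses))
```

In the real scraper, each offset would be passed to the browser with something like `driver.execute_script("window.scrollTo(0, arguments[0])", offset)`, followed by a `time.sleep(pause)`.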

After that, I could run the updated scraper many times. It was not perfect in one go; it improved steadily as I kept giving Windsurf prompts. For example, the product detail page initially showed only 5 reviews, so I told Windsurf to first load all the reviews by clicking the “Load More Reviews” button and only then start collecting them. With improvements like these, I was finally able to scrape the data I needed. What a world we live in, where you don’t even need to write a single line of code and the functionality you need is practically built for you!
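The "load everything first, then collect" idea can be sketched without a browser. In this minimal example, `click_until_gone`, `FakeButton`, and the pretend `page` dictionary are all hypothetical stand-ins; the `max_clicks` cap is an extra safety measure I am assuming here, not something in the generated script.

```python
def click_until_gone(find_button, max_clicks=50):
    """Click the button returned by find_button() until it disappears.

    find_button is a hypothetical callable that returns an object with a
    click() method, or None when the button is no longer on the page.
    max_clicks is a safety cap so a button that never goes away cannot
    loop forever. Returns the number of clicks performed.
    """
    clicks = 0
    while clicks < max_clicks:
        button = find_button()
        if button is None:
            break
        button.click()
        clicks += 1
    return clicks


class FakeButton:
    """Stand-in for a real browser element, used only for this demo."""

    def __init__(self, page):
        self.page = page

    def click(self):
        # Each click reveals up to 5 more reviews.
        self.page["hidden_reviews"] -= min(5, self.page["hidden_reviews"])


# Pretend page: 12 reviews are still hidden behind the button.
page = {"hidden_reviews": 12}


def find_button():
    return FakeButton(page) if page["hidden_reviews"] > 0 else None


clicks = click_until_gone(find_button)
print(clicks, page["hidden_reviews"])
```

Only after the loop finishes would the page source be handed to the parser, which is the order I asked Windsurf to follow.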

Key Learnings

- Python made web scraping feel almost effortless.

- Windsurf’s AI-powered context saved me from constant Googling.

- Moving from PHP to Python for scripting tasks opens up new productivity doors.

- The more detailed the prompt you give Windsurf, the better the result.

Final Thoughts

If you’re a PHP developer and have been avoiding Python, this might be the perfect time to dip your toes. Web scraping is a great starter project, and with tools like Windsurf, you’ll feel right at home—even if it’s your first Python script.

Have you tried Windsurf or built your own scrapers? Share your experience in the comments!